VMware vROps - When and how to scale your monitoring solution?

This post was inspired by numerous vROps sizing consults I have done over the past year, and by my good friend Max Drury, whose vROps Dynamic Thresholds (DT) calculations were taking over 24 hours, which made the entire solution very sluggish and almost unusable. He wanted to add another node to his vROps cluster, but after closer analysis, it turned out he would be better off scaling up his nodes by adding more CPU and memory. His two small nodes simply were not large enough to handle the additional objects and metrics collected as his environment grew over the course of a year. The number of vROps users had not changed, so there was no need to add nodes. Simply bumping the CPU and memory up to the medium size on both nodes was enough to resolve both issues: the long DT calculation time and the overall slowness.

One key factor in Max's case was that his initial cluster had been sized for a much smaller environment. Over time, the number of collected objects and metrics grew, and Dynamic Thresholds had to chew through 12 months of accumulated data each night. The lesson is that it is very important to keep an eye on the number of objects and metrics collected by each adapter, as it directly correlates to the required node size.

If you look at the vROps Sizing Guidelines (KB 2093783), you can see the recommended number of objects and metrics per node on the Overall Scaling worksheet. If any of your nodes goes over the recommended number of objects for its size, you may run into intermittent problems, and you should bump up its compute resources to the next size. (Adding another node does not help in this case, as the existing node size is not powerful enough to handle the load it already has.) Changing the compute resources to the next recommended size is very important. Do not choose in-between sizes, as they will not improve cluster performance and will only waste resources. This is because a script runs every time the appliances start and sets the JVM heap size based on the configured CPU and memory. If the CPU and memory do not match one of the predefined sizes, the script defaults to the largest standard setting that the allocated resources can accommodate. For example, if you set the CPU to 6 vCPUs and memory to 24GB (between small and medium), the script will still configure the JVM as if it were a small node and only take advantage of 16GB of memory. This negates any benefit of the additional 8GB and does not improve vROps performance. So just bump it up to the next supported size.

UPDATE 10/28/2015: The script mentioned above that sets the JVM heap size only considers the memory size. So allocating more CPU than the standard vROps sizes call for can have a positive performance impact.
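To make the "snap down to a standard size" behavior concrete, here is a minimal Python sketch of the memory-only logic described above. It is not VMware's actual startup script, just an illustration under assumptions: the 16GB small size comes from the example above, while the 32GB medium and 48GB large values are assumptions based on the vROps 6.x sizing guidelines, so verify them for your version. The names `effective_size` and `wasted_memory_gb` are hypothetical.

```python
# Hypothetical sketch, NOT VMware's script: models how a node is tuned for the
# largest standard size that fits its memory (memory only, per the update).
# small=16 GB is from the post's example; medium=32 GB and large=48 GB are
# assumptions based on the vROps 6.x sizing guidelines.
STANDARD_MEMORY_GB = {
    "small": 16,
    "medium": 32,
    "large": 48,
}


def effective_size(allocated_memory_gb: int) -> tuple[str, int]:
    """Return the standard size (and its memory) the node will be tuned for."""
    fitting = [
        (name, mem)
        for name, mem in STANDARD_MEMORY_GB.items()
        if mem <= allocated_memory_gb
    ]
    if not fitting:
        raise ValueError("allocation is below the smallest modeled size")
    # Pick the largest standard size that still fits in the allocation.
    return max(fitting, key=lambda item: item[1])


def wasted_memory_gb(allocated_memory_gb: int) -> int:
    """Memory above the matched standard size that the JVM tuning ignores."""
    _, used = effective_size(allocated_memory_gb)
    return allocated_memory_gb - used


if __name__ == "__main__":
    # The example from the post: 24GB sits between small and medium.
    print(effective_size(24))    # ('small', 16)
    print(wasted_memory_gb(24))  # 8
```

Run against the 24GB example, the sketch lands on the small profile and leaves 8GB unused, which is exactly why in-between memory sizes buy you nothing.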

One thing you will notice about the number of supported objects, especially in a large vSphere environment, is that if you are collecting over 5,000 objects per node, you have to go with the large node size. Since a large node has 16 vCPUs, it can incur a performance penalty from spanning NUMA nodes. If your hosts' processors have fewer than 16 cores per socket, you may be hesitant to go to the large node size. What I typically recommend in such cases is deploying a local Remote Collector in front of the vCenter with a large number of objects. This Remote Collector buffer benefits the solution in two ways. First, it allows you to keep medium-size nodes in your cluster a while longer before having to go to the large size. Second, it relieves metric collection pressure on the analytics cluster by offloading it to the Remote Collector, which normalizes the metric data and presents it to the analytics cluster ready for storage. Sometimes this has to be done even with large nodes already deployed, if a vCenter has over 10,000 objects. So Remote Collectors are not just intended for remote datacenters; leveraging them locally allows you to push the limits of vROps while maintaining the solution's performance.
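A quick sanity check along these lines can be scripted. The Python sketch below estimates objects per node (assuming objects spread evenly across the analytics nodes), maps that to the smallest standard size that covers it, and flags vCenters past the 10,000-object mark as local Remote Collector candidates. Only the 5,000 and 10,000 figures come from this post; `SMALL_MAX_OBJECTS` is a placeholder you should replace with the value from the Overall Scaling worksheet in KB 2093783, and the large size's own ceiling is not modeled. All function names are hypothetical.

```python
import math

# Hypothetical per-node object ceilings, for illustration only.
# MEDIUM_MAX_OBJECTS reflects this post: over 5,000 objects per node needs a
# large node. SMALL_MAX_OBJECTS is a PLACEHOLDER: take the real value for your
# version from the Overall Scaling worksheet in KB 2093783.
SMALL_MAX_OBJECTS = 2_000
MEDIUM_MAX_OBJECTS = 5_000
# Per this post, a vCenter with over 10,000 objects may warrant a local
# Remote Collector even when large nodes are already deployed.
RC_VCENTER_OBJECTS = 10_000


def objects_per_node(total_objects: int, nodes: int) -> int:
    """Objects each analytics node carries, assuming an even spread."""
    if nodes < 1:
        raise ValueError("the cluster needs at least one node")
    return math.ceil(total_objects / nodes)


def required_size(per_node: int) -> str:
    """Smallest standard node size whose object ceiling covers per_node."""
    if per_node <= SMALL_MAX_OBJECTS:
        return "small"
    if per_node <= MEDIUM_MAX_OBJECTS:
        return "medium"
    return "large"


def local_rc_candidates(vcenter_objects: dict[str, int]) -> list[str]:
    """vCenters big enough to consider fronting with a local Remote Collector."""
    return sorted(
        name for name, count in vcenter_objects.items() if count > RC_VCENTER_OBJECTS
    )


if __name__ == "__main__":
    per_node = objects_per_node(11_000, 2)
    print(per_node, required_size(per_node))                          # 5500 large
    print(local_rc_candidates({"vc-prod": 12_500, "vc-dev": 1_800}))  # ['vc-prod']
```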

Typical vROps solution with a Remote Collector:

vROps solution leveraging a local Remote Collector:

Keep in mind that the sample designs above are merely guidelines; dozens of permutations are possible. The key considerations should be meeting your business requirements and keeping the design simple, but those are topics for another post.

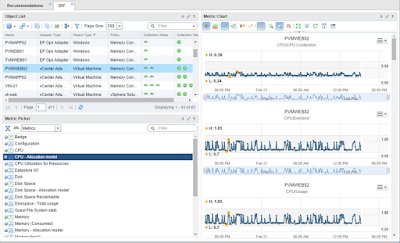

So when should you scale out the vROps analytics cluster by adding more nodes? There are two good use cases for adding nodes to your vROps solution.

The first one involves giving more users access to their metrics. As I mentioned in the VMware vROps - Application VMs Performance Dashboard post, when you open up the dashboards to application analysts, their popularity may put much more demand on resources than a small virtualization team needed in the past. When vROps 6.0 came out, VMware stated that only 4 users per node were supported. However, that statement omitted one important fact: it referred to 4 users under the highest possible load, so under moderate load the number of users per node is significantly higher. Unfortunately, determining the optimal number of users per node in your environment is not an exact science. Many factors affect the ratio, such as the number of dashboards and widgets being rendered by other users, the number of reports being generated, DT calculations, capacity calculations, the collection interval, and the analytics performed on newly collected metrics, to name just a few. Long story short, if the number of users has increased significantly and you see a noticeable performance decline, it is probably time to add more nodes, provided the number of objects per node has not crossed one of the thresholds. If it has, you may have to do both.

The other use case for adding nodes to the analytics cluster is a significant increase in the number of collected objects and metrics. For example, you added another vCenter or two with a significant number of objects, or you installed a new management pack (MP) that will collect a large number of objects. If the additional data source (vCenter, management pack, etc.) also puts any node over its recommended object threshold, you may need to increase the compute resources as well, unless you elect to use a local Remote Collector as mentioned before. Keep in mind that some MPs require a dedicated Remote Collector, as is the case with Hyperic.
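The rules of thumb for scaling up versus scaling out can be summarized as a small decision function. This Python sketch is only a restatement of the reasoning in this post, not a VMware tool: the `Observation` fields are hypothetical yes/no inputs you would judge from your own environment, and the rule that a local Remote Collector can stand in for scaling up follows the "unless you elect to use a local Remote Collector" point above.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical yes/no inputs you would gather from your own environment."""
    users_increased: bool           # significantly more vROps users than before
    performance_declined: bool      # noticeable slowdown in the UI or analytics
    new_data_source: bool           # e.g. another vCenter or a new management pack
    node_over_threshold: bool       # any node above its size's object ceiling
    local_rc_planned: bool = False  # will a local Remote Collector absorb the load?


def scaling_actions(obs: Observation) -> list[str]:
    """Map the rules of thumb in this post to a list of suggested actions."""
    actions: list[str] = []
    # Scale out: more users plus a slowdown, or a big jump in collected objects.
    if (obs.users_increased and obs.performance_declined) or obs.new_data_source:
        actions.append("scale out")
    # Scale up: a node over its object threshold needs the next standard size,
    # unless a local Remote Collector is used to offload collection instead.
    if obs.node_over_threshold:
        actions.append("local remote collector" if obs.local_rc_planned else "scale up")
    return actions


if __name__ == "__main__":
    # Max's case: same users, but the objects outgrew his small nodes.
    max_case = Observation(users_increased=False, performance_declined=True,
                           new_data_source=False, node_over_threshold=True)
    print(scaling_actions(max_case))  # ['scale up']
```

Note that Max's scenario comes out as "scale up" only, while more users combined with an object threshold breach returns both actions, matching the "you may have to do both" case.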

In conclusion, be smart about which way you scale your vROps solution. Collect and consider as many facts as possible so that you make an educated decision rather than a shot in the dark. This will help keep the solution healthy with low overhead. When in doubt, try increasing the compute resources first; it is easy, and you can quickly scale back if you do not achieve the desired results. Adding nodes is always more labor intensive, and removing them when they are no longer needed has its own set of considerations.

However, don't just assume that adding compute resources will solve your Dynamic Threshold calculation issues. As mentioned earlier, DT calculations take into account all of the metric data in your system. If your retention period is very long (the default is 6 months) or you have increased the collection frequency (the default interval is 5 minutes), DT calculations will take significantly longer. In such cases, it may not be enough to increase the compute resources; you may also need to spread the workload across additional nodes, shorten the retention period, and/or lengthen the collection interval. Sometimes database issues can also affect DT calculations, in which case adding resources or nodes will not have a significant impact and you will have to contact VMware Technical Support to troubleshoot. Lastly, don't forget to revisit the vROps Sizing Calculator when making significant changes to your solution. It is not just intended for the initial build; you can add more objects and metrics to it as your solution grows, as a sanity check.
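To get a feel for how retention and collection interval drive the amount of data DT has to work through, a little arithmetic goes a long way. The Python sketch below counts the data points retained per metric; treating 6 months as 180 days is an assumption, and sample count is only a rough proxy, since actual DT run time depends on more than raw data volume. The function names are hypothetical.

```python
MINUTES_PER_DAY = 24 * 60


def samples_per_metric(retention_days: int, interval_minutes: int) -> int:
    """Data points retained for one metric over the retention window."""
    return retention_days * MINUTES_PER_DAY // interval_minutes


def total_samples(metric_count: int, retention_days: int, interval_minutes: int) -> int:
    """Rough proxy for the volume of data a DT run has to read."""
    return metric_count * samples_per_metric(retention_days, interval_minutes)


if __name__ == "__main__":
    print(samples_per_metric(180, 5))   # 51840  (~6 months at the 5-minute default)
    print(samples_per_metric(365, 5))   # 105120 (12 months, as in Max's case)
    print(samples_per_metric(180, 1))   # 259200 (1-minute collection, 5x the data)
    print(samples_per_metric(180, 10))  # 25920  (10-minute collection, half the data)
```

Doubling the retention doubles the data per metric, and going from a 5-minute to a 1-minute interval multiplies it by five, which is why shortening retention or lengthening the interval can help where extra CPU and memory alone may not.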

I hope this helped to demystify the vROps scaling and sizing process a bit. Thank you for reading!
